上周四,在舊金山舉辦的首屆開發(fā)者大會上,,人工智能初創(chuàng)公司Anthropic發(fā)布了最新一代“前沿”或尖端人工智能模型Claude Opus 4和Claude Sonnet 4,。這家估值超610億美元的公司在一篇博文中表示,備受期待的新模型Opus是“全球最佳編碼模型”,,能夠“在需要持續(xù)專注且涉及數(shù)千步驟的長期任務(wù)中保持穩(wěn)定性能”,。由新模型驅(qū)動的人工智能代理可對數(shù)千個數(shù)據(jù)源展開分析,,并執(zhí)行復(fù)雜操作。

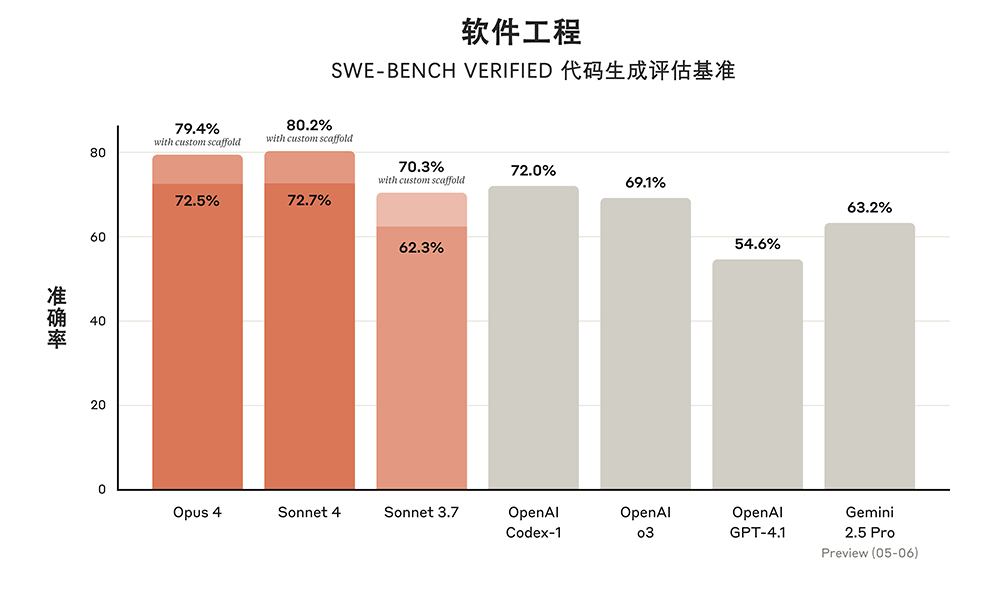

此次發(fā)布凸顯了科技公司在“全球最先進(jìn)人工智能模型”領(lǐng)域的角逐之激烈——尤其在軟件工程等領(lǐng)域——各企業(yè)紛紛采用新技術(shù)來提升速度與效率,,谷歌上周推出的實驗性研究模型Gemini Diffusion便是例證,。在一項對比不同大型語言模型軟件工程任務(wù)表現(xiàn)的基準(zhǔn)測試中,Anthropic的兩款模型擊敗了OpenAI的最新模型,,而谷歌的最佳模型則表現(xiàn)落后,。

部分早期測試者已通過實際任務(wù)體驗新模型。該公司舉例稱,,購物獎勵公司樂天株式會社(Rakuten)的人工智能總經(jīng)理表示,,Opus 4在部署到一個復(fù)雜項目后“自主編碼近七小時”。

Anthropic技術(shù)團(tuán)隊成員黛安·佩恩(Dianne Penn)告訴《財富》雜志:“這實際上是人工智能系統(tǒng)能力的重大飛躍,?!庇绕涫钱?dāng)模型從“助手”角色升級為“代理”(即能自主為用戶執(zhí)行任務(wù)的虛擬協(xié)作者)時。

她補(bǔ)充道,,Claude Opus 4 增添了若干新功能,,例如能更精準(zhǔn)地執(zhí)行指令,且在“記憶”能力上實現(xiàn)了提升,。佩恩提到,從過往情況來看,,這些系統(tǒng)難以記住所有歷史操作,,但此次“特意開發(fā)了長期任務(wù)感知能力”。該模型借助類似文件系統(tǒng)的機(jī)制來追蹤進(jìn)度,,并策略性地調(diào)用記憶數(shù)據(jù)以規(guī)劃后續(xù)步驟,,如同人類會依據(jù)現(xiàn)實狀況調(diào)整計劃與策略。

兩款模型均可在推理與工具調(diào)用(如網(wǎng)頁搜索)之間切換,,還能同時使用多種工具(如同步搜索網(wǎng)頁并運行代碼測試),。

Anthropic人工智能平臺產(chǎn)品負(fù)責(zé)人邁克爾·格斯特恩哈伯(Michael Gerstenhaber)表示:“我們確實視此為一場向巔峰進(jìn)發(fā)的競賽。我們希望確保人工智能能造福所有人,,因此要給所有實驗室施加壓力,,促使其以安全的方式推動人工智能發(fā)展?!彼忉尫Q,,這包括展示公司自身的安全標(biāo)準(zhǔn)。

Claude 4 Opus所推出的安全協(xié)議,,其嚴(yán)格程度遠(yuǎn)超以往任何一款A(yù)nthropic模型,。該公司的《負(fù)責(zé)任擴(kuò)展政策》(RSP)作為一項公開承諾,最初于2023年9月發(fā)布,,其中明確規(guī)定:“除非實施可將風(fēng)險控制在可接受范圍內(nèi)的安全與保障措施,,否則不會訓(xùn)練或部署可能引發(fā)災(zāi)難性傷害的模型,。”Anthropic由OpenAI前員工于2021年創(chuàng)立,,他們擔(dān)憂OpenAI過于追求速度與規(guī)模,,而忽略了安全與治理。

2024年10月,,該公司對《負(fù)責(zé)任擴(kuò)展政策》進(jìn)行更新,,采用“更為靈活且細(xì)致的方法來評估和管理人工智能風(fēng)險”,同時堅持承諾,,即除非已實施充分的保障措施,,否則不會訓(xùn)練或部署模型?!?/p>

截至目前,,Anthropic的所有模型均依照其《負(fù)責(zé)任擴(kuò)展政策》被歸為人工智能安全等級2(以下簡稱ASL-2),該等級“為人工智能模型設(shè)定了安全部署與模型安全的基礎(chǔ)標(biāo)準(zhǔn)”,。Anthropic發(fā)言人表示,,公司并未排除新模型 Claude Opus 4達(dá)到ASL-2門檻的可能性,不過,,公司正積極依據(jù)更為嚴(yán)格的ASL-3安全標(biāo)準(zhǔn)推出該模型——該標(biāo)準(zhǔn)要求強(qiáng)化防范模型被盜用和濫用的保護(hù)措施,,涵蓋構(gòu)建更強(qiáng)大的防御機(jī)制,以杜絕有害信息泄露或防止對模型內(nèi)部“權(quán)重”的訪問,。

根據(jù)Anthropic的《負(fù)責(zé)任擴(kuò)展政策》,,被歸入該公司第三安全等級的模型達(dá)到了更為危險的能力閾值,其功能強(qiáng)大到足以構(gòu)成重大風(fēng)險,,比如協(xié)助武器開發(fā)或?qū)崿F(xiàn)人工智能研發(fā)自動化,。Anthropic證實,Opus 4無需最高等級的保護(hù)措施,,即ASL-4,。

Anthropic的一位發(fā)言人表示:“我們在推出上一款模型Claude 3.7 Sonnet時,便已預(yù)料到可能會采取此類措施,。當(dāng)時我們認(rèn)定該模型無需遵循ASL-3等級的保護(hù)措施,。但我們也承認(rèn),鑒于技術(shù)進(jìn)步之迅速,,不久的將來,,模型可能需要更嚴(yán)格的保護(hù)措施?!?/p>

在Claude 4 Opus即將發(fā)布之際,,她解釋稱,Anthropic主動決定依據(jù)ASL-3標(biāo)準(zhǔn)推出該產(chǎn)品?!按伺e使我們能在需求產(chǎn)生之前,,專注于開發(fā)、測試并完善這些保護(hù)措施,。依據(jù)我們的測試結(jié)果,,已排除該模型需要ASL-4等級保護(hù)措施的可能性?!辈贿^,,公司并未說明升級至ASL-3標(biāo)準(zhǔn)的具體觸發(fā)緣由。

Anthropic歷來會在產(chǎn)品發(fā)布之際,,同步推出模型或“系統(tǒng)卡片”,,提供有關(guān)模型能力及安全評估的詳細(xì)信息。佩恩向《財富》雜志透露,,Anthropic將在新推出Opus 4和Sonnet 4時發(fā)布對應(yīng)的模型卡片,,發(fā)言人也證實卡片會與模型一同發(fā)布。

近期,,OpenAI和谷歌等公司均推遲發(fā)布模型卡片,。今年4月,OpenAI因在發(fā)布GPT-4.1模型時未附帶模型卡片而遭受批評,,該公司稱該模型并非“前沿”模型,,無需提供卡片。今年3月,,谷歌在Gemini 2.5 Pro發(fā)布數(shù)周后才公布其模型卡片,,人工智能治理專家批評其內(nèi)容“貧乏”且“令人擔(dān)憂”。(財富中文網(wǎng))

譯者:中慧言-王芳

Anthropic unveiled its latest generation of “frontier,” or cutting-edge, AI models, Claude Opus 4 and Claude Sonnet 4, during its first conference for developers on Thursday in San Francisco. The AI startup, valued at over $61 billion, said in a blog post that the new, highly anticipated Opus model is “the world’s best coding model,” and “delivers sustained performance on long-running tasks that require focused effort and thousands of steps.” AI agents powered by the new models can analyze thousands of data sources and perform complex actions.

The new release underscores the fierce competition among companies racing to build the world’s most advanced AI models—especially in areas like software coding—and implement new techniques for speed and efficiency, as Google did this week with its experimental research model demo called Gemini Diffusion. On a benchmark comparing how well different large language models perform on software engineering tasks, Anthropic’s two models beat OpenAI’s latest models, while Google’s best model lagged behind.

Some early testers have already had access to the model to try it out in real-world tasks. In one example provided by the company, a general manager of AI at shopping rewards company Rakuten said Opus 4 “coded autonomously for nearly seven hours” after being deployed on a complex project.

Dianne Penn, a member of Anthropic’s technical staff, told Fortune that “this is actually a very large change and leap in terms of what these AI systems can do,” particularly as the models advance from serving as “copilots,” or assistants, to “agents,” or virtual collaborators that can work autonomously on behalf of the user.

Claude Opus 4 has some new capabilities, she added, including following instructions more precisely and improved “memory.” Historically, these systems don’t remember everything they’ve done before, said Penn, but “we were deliberate to be able to unlock long-term task awareness.” The model uses a file system of sorts to keep track of progress, then strategically checks what is stored in memory before deciding on its next steps, much as a person adjusts plans and strategies based on real-world situations.
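To make the “file system of sorts” idea concrete, here is a minimal Python sketch of file-backed progress tracking. It is only an illustration under stated assumptions: the `ProgressNotes` class, the `next_step` helper, and the JSON file layout are hypothetical and are not drawn from Anthropic’s implementation, which the company has not described in this detail.

```python
import json
import tempfile
from pathlib import Path
from typing import List, Optional


class ProgressNotes:
    """Hypothetical file-backed memory: one JSON file listing finished steps."""

    def __init__(self, path: Path) -> None:
        self.path = path

    def load(self) -> List[str]:
        # No file yet means nothing has been completed.
        if not self.path.exists():
            return []
        return json.loads(self.path.read_text(encoding="utf-8"))

    def record(self, step: str) -> None:
        # Append the step once, then write the whole list back to disk.
        done = self.load()
        if step not in done:
            done.append(step)
        self.path.write_text(json.dumps(done), encoding="utf-8")


def next_step(plan: List[str], notes: ProgressNotes) -> Optional[str]:
    """Return the first planned step not yet recorded, or None when finished."""
    done = set(notes.load())
    for step in plan:
        if step not in done:
            return step
    return None


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        notes = ProgressNotes(Path(tmp) / "progress.json")
        plan = ["read issue", "write patch", "run tests"]
        notes.record("read issue")
        # A fresh object reads the same file, as a resumed session would.
        resumed = ProgressNotes(notes.path)
        print(next_step(plan, resumed))  # prints "write patch"
```

Because the notes live on disk rather than in the running process, a long task can be picked up again by consulting the file instead of replaying every earlier action.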

Both models can alternate between reasoning and using tools like web search, and they can also use multiple tools at once—like searching the web and running a code test.
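Using multiple tools at once can be pictured with a short Python sketch. The two tool functions below are hypothetical stand-ins that sleep instead of searching the web or running tests; they show only the concurrency pattern, not how Claude or Anthropic’s API actually dispatches tools.

```python
import asyncio
from typing import List


async def search_web(query: str) -> str:
    # Stand-in for a real search tool; sleeps instead of touching the network.
    await asyncio.sleep(0.01)
    return f"results for {query!r}"


async def run_code_test(name: str) -> str:
    # Stand-in for a real test runner.
    await asyncio.sleep(0.01)
    return f"{name}: passed"


async def use_tools_together(query: str, test_name: str) -> List[str]:
    # gather() runs both coroutines concurrently and returns
    # their results in the order the coroutines were passed in.
    results = await asyncio.gather(search_web(query), run_code_test(test_name))
    return list(results)


if __name__ == "__main__":
    print(asyncio.run(use_tools_together("ASL-3", "test_patch")))
```

Both calls are in flight during the same interval, so the total wait is roughly that of the slower tool rather than the sum of the two.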

“We really see this [as] a race to the top,” said Michael Gerstenhaber, AI platform product lead at Anthropic. “We want to make sure that AI improves for everybody, that we are putting pressure on all the labs to increase that in a safe way.” That includes exhibiting the company’s own safety standards, he explained.

Claude Opus 4 is launching with stricter safety protocols than any previous Anthropic model. The company’s Responsible Scaling Policy (RSP), a public commitment originally released in September 2023, stated that Anthropic would not “train or deploy models capable of causing catastrophic harm unless we have implemented safety and security measures that will keep risks below acceptable levels.” Anthropic was founded in 2021 by former OpenAI employees who were concerned that OpenAI was prioritizing speed and scale over safety and governance.

In October 2024, the company updated its RSP with a “more flexible and nuanced approach to assessing and managing AI risks while maintaining our commitment not to train or deploy models unless we have implemented adequate safeguards.”

Until now, Anthropic’s models have all been classified as AI Safety Level 2 (ASL-2) under the company’s Responsible Scaling Policy, a standard meant to “provide a baseline level of safe deployment and model security for AI models.” While an Anthropic spokesperson said the company hasn’t ruled out that its new Claude Opus 4 could meet the ASL-2 threshold, it is proactively launching the model under the stricter ASL-3 safety standard, which requires enhanced protections against model theft and misuse, including stronger defenses to prevent the release of harmful information or access to the model’s internal “weights.”

Models that are categorized in Anthropic’s third safety level meet more dangerous capability thresholds, according to the company’s Responsible Scaling Policy, and are powerful enough to pose significant risks such as aiding in the development of weapons or automating AI R&D. Anthropic confirmed that Opus 4 does not require the highest level of protections, categorized as ASL-4.

“We anticipated that we might do this when we launched our last model, Claude 3.7 Sonnet,” said the Anthropic spokesperson. “In that case, we determined that the model did not require the protections of the ASL-3 Standard. But we acknowledged the very real possibility that given the pace of progress, near future models might warrant these enhanced measures.”

In the lead-up to releasing Claude Opus 4, she explained, Anthropic proactively decided to launch it under the ASL-3 Standard. “This approach allowed us to focus on developing, testing, and refining these protections before we needed [them]. We’ve ruled out that the model requires ASL-4 safeguards based on our testing.” Anthropic did not say what triggered the decision to move to ASL-3.

Anthropic has also always released model, or system, cards with its launches, which provide detailed information on the models’ capabilities and safety evaluations. Penn told Fortune that Anthropic would be releasing a model card with its new launch of Opus 4 and Sonnet 4, and a spokesperson confirmed it would be released when the model launches today.

Recently, companies including OpenAI and Google have delayed releasing model cards. In April, OpenAI was criticized for releasing its GPT-4.1 model without a model card; the company said the model was not a “frontier” model and did not require one. And in March, Google published its Gemini 2.5 Pro model card weeks after the model’s release, and an AI governance expert criticized it as “meager” and “worrisome.”